This post collects practice questions and answers for the Microsoft MCSA SQL 2016 exam 70-765, "Provisioning SQL Databases", from leads4pass (https://www.leads4pass.com/70-765.html). The questions below cover the knowledge points tested in the exam, and the full set is available as a PDF download.

Latest Microsoft 70-765 dumps pdf practice files: https://drive.google.com/open?id=0B_7qiYkH83VRSzdtSnprR090aVk

Latest Microsoft 70-767 dumps pdf practice files: https://drive.google.com/open?id=0B_7qiYkH83VROUZYeUJKbEFKd2M

Vendor: Microsoft

Certifications: MCSA SQL 2016

Exam Name: Provisioning SQL Databases

Exam Code: 70-765

Total Questions: 149 Q&As

Useful Microsoft MCSA SQL 2016 70-765 Dumps Exam Practice Questions And Answers (1-20)

QUESTION 1

You are a database administrator for a Microsoft SQL Server 2014 environment.

You want to deploy a new application that will scale out the workload to at least five different SQL Server instances.

You need to ensure that for each copy of the database, users are able to read and write data that will then be synchronized between all of the database instances.

Which feature should you use?

A. Database Mirroring

B. Peer-to-Peer Replication

C. Log Shipping

D. Availability Groups

Correct Answer: B

Explanation:

Peer-to-peer replication provides a scale-out and high-availability solution by maintaining copies of data across multiple server instances, also referred to as nodes. Built on the foundation of transactional replication, peer-to-peer replication propagates transactionally consistent changes in near real-time. This enables applications that require scale-out of read operations to distribute the reads from clients across multiple nodes. Because data is maintained across the nodes in near real-time, peer-to-peer replication provides data redundancy, which increases the availability of data.

QUESTION 2

You develop a Microsoft SQL Server 2014 database that contains a heap named OrdersHistorical.

You write the following Transact-SQL query:

INSERT INTO OrdersHistorical

SELECT * FROM CompletedOrders

You need to optimize transaction logging and locking for the statement.

Which table hint should you use?

A. HOLDLOCK

B. ROWLOCK

C. XLOCK

D. UPDLOCK

E. TABLOCK

Correct Answer: E

Explanation:

When importing data into a heap by using the INSERT INTO SELECT FROM statement, you can enable optimized logging and locking for the statement by specifying the TABLOCK hint for the target table.
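As a minimal sketch of the hint placement, the statement from the question could be written as follows. Note that minimal logging also depends on the database using the simple or bulk-logged recovery model; that condition is not stated in the question.

```sql
-- The TABLOCK hint is applied to the target table of the INSERT,
-- not to the source table in the SELECT.
INSERT INTO OrdersHistorical WITH (TABLOCK)
SELECT * FROM CompletedOrders;
```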

QUESTION 3

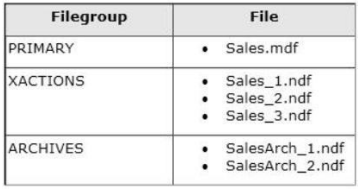

You administer a Microsoft SQL Server database named Sales. The database is 3 terabytes in size.

The Sales database is configured as shown in the following table.

You discover that all files except Sales_2.ndf are corrupt.

You need to recover the corrupted data in the minimum amount of time.

What should you do?

A. Perform a file restore.

B. Perform a transaction log restore.

C. Perform a restore from a full backup.

D. Perform a filegroup restore.

Correct Answer: A

Explanation:

In a file restore, the goal is to restore one or more damaged files without restoring the whole database.

QUESTION 4

You have a SQL Server 2016 database named DB1.

You plan to import a large number of records from a SQL Azure database to DB1.

You need to recommend a solution to minimize the amount of space used in the transaction log during the import operation.

What should you include in the recommendation?

A. The bulk-logged recovery model

B. The full recovery model

C. A new partitioned table

D. A new log file

E. A new file group

Correct Answer: A

Explanation:

Compared to the full recovery model, which fully logs all transactions, the bulk-logged recovery model minimally logs bulk operations while still fully logging other transactions. The bulk-logged recovery model protects against media failure and, for bulk operations, provides the best performance and the least log space usage.

Note: The bulk-logged recovery model is a special-purpose recovery model that should be used only intermittently to improve the performance of certain large-scale bulk operations, such as bulk imports of large amounts of data.
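The intermittent use described in the note might look like the sketch below. It assumes DB1 normally runs under the full recovery model; the backup path is a hypothetical placeholder.

```sql
-- Switch to bulk-logged only for the duration of the import.
ALTER DATABASE DB1 SET RECOVERY BULK_LOGGED;

-- ... run the bulk import here (for example bcp, BULK INSERT,
--     or INSERT ... SELECT with the TABLOCK hint) ...

-- Switch back and take a log backup so point-in-time recovery
-- is available again from this point onward.
ALTER DATABASE DB1 SET RECOVERY FULL;
BACKUP LOG DB1 TO DISK = N'D:\Backups\DB1_after_import.trn';  -- hypothetical path
```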

QUESTION 5

You plan to deploy an on-premises SQL Server 2014 database to Azure SQL Database.

You have the following requirements:

Maximum database size of 500 GB

A point-in-time-restore of 35 days

Maximum database transaction units (DTUs) of 500

You need to choose the correct service tier and performance level. Which service tier should you choose?

A. Standard S3

B. Premium P4

C. Standard S0

D. Basic

Correct Answer: B

Explanation:

You should choose Premium P4. The Premium tier is the highest Azure SQL Database tier offered. This tier is used for databases and applications that require the highest level of performance and recovery. The P4 level supports a maximum of 500 DTUs, a maximum database size of 500 GB, and a point-in-time restore to any point in the last 35 days.

QUESTION 6

You administer a single server that contains a Microsoft SQL Server 2014 default instance. You plan to install a new application that requires the deployment of a database on the server. The application login requires sysadmin permissions.

You need to ensure that the application login is unable to access other production databases.

What should you do?

A. Use the SQL Server default instance and configure an affinity mask.

B. Install a new named SQL Server instance on the server.

C. Use the SQL Server default instance and enable Contained Databases.

D. Install a new default SQL Server instance on the server.

Correct Answer: B

QUESTION 7

You manage an on-premises Microsoft SQL server that has a database named DB1.

An application named App1 retrieves customer information for DB1.

Users report that App1 takes an unacceptably long time to retrieve customer records.

You need to find queries that take longer than 400 ms to run.

Which statement should you execute?

A. Option A

B. Option B

C. Option C

D. Option D

Correct Answer: B

Explanation:

Total_worker_time: Total amount of CPU time, reported in microseconds (but only accurate to milliseconds), that was consumed by executions of this plan since it was compiled.
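The answer options are not reproduced in this post, so the query below is only a sketch of the general approach, not the text of option B. It assumes the threshold is applied to the average CPU time per execution; because total_worker_time is reported in microseconds, 400 ms becomes 400000.

```sql
-- Cached query plans whose average CPU time per execution exceeds 400 ms.
SELECT TOP (20)
    qs.execution_count,
    qs.total_worker_time  / qs.execution_count AS avg_cpu_us,
    qs.total_elapsed_time / qs.execution_count AS avg_elapsed_us,
    st.text AS query_text
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE qs.total_worker_time / qs.execution_count > 400000  -- 400 ms in microseconds
ORDER BY avg_cpu_us DESC;
```

Filtering on total_elapsed_time instead would measure wall-clock duration rather than CPU time.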

QUESTION 8

You administer a Microsoft SQL Server 2014 server. The MSSQLSERVER service uses a domain account named CONTOSO\SQLService.

You plan to configure Instant File Initialization.

You need to ensure that Data File Autogrow operations use Instant File Initialization.

What should you do? Choose all that apply.

A. Restart the SQL Server Agent Service.

B. Disable snapshot isolation.

C. Restart the SQL Server Service.

D. Add the CONTOSO\SQLService account to the Perform Volume Maintenance Tasks local security policy.

E. Add the CONTOSO\SQLService account to the Server Operators fixed server role.

F. Enable snapshot isolation.

Correct Answer: CD

QUESTION 9

You manage a Microsoft SQL Server environment in a Microsoft Azure virtual machine.

You must enable Always Encrypted for columns in a database.

You need to configure the key store provider.

What should you do?

A. Use the Randomized encryption type

B. Modify the connection string for applications.

C. Auto-generate a column master key.

D. Use the Azure Key Vault.

Correct Answer: D

Explanation:

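There are two high-level categories of key stores to consider: local key stores and centralized key stores.

Centralized key stores serve applications on multiple computers. An example of a centralized key store is Azure Key Vault.

Local key stores can be used only by applications on the computers that contain the key store. An example of a local key store is the Windows Certificate Store.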

QUESTION 10

You administer a Microsoft SQL Server 2014 database.

You need to ensure that the size of the transaction log file does not exceed 2 GB.

What should you do?

A. Execute sp_configure 'max log size', 2G.

B. Use the ALTER DATABASE...SET LOGFILE command along with the maxsize parameter.

C. In SQL Server Management Studio, right-click the instance and select Database Settings. Set the maximum size of the file for the transaction log.

D. In SQL Server Management Studio, right-click the database, select Properties, and then click Files. Open the Transaction log Autogrowth window and set the maximum size of the file.

Correct Answer: B

Explanation:

You can use the ALTER DATABASE (Transact-SQL) statement to manage the growth of a transaction log file.

To control the maximum size of a log file in KB, MB, GB, or TB units, or to set growth to UNLIMITED, use the MAXSIZE option. However, there is no SET LOGFILE subcommand; MAXSIZE is specified in the MODIFY FILE clause.
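A minimal sketch of capping the log file with the MAXSIZE option follows. The database name Sales and the logical file name Sales_log are hypothetical; the real logical name can be read from sys.database_files.

```sql
-- Cap the transaction log file at 2 GB.
ALTER DATABASE Sales
MODIFY FILE (NAME = Sales_log, MAXSIZE = 2GB);

-- Check the result from inside the database.
-- max_size is reported in 8-KB pages; -1 means unlimited growth.
SELECT name, type_desc, max_size
FROM sys.database_files
WHERE type_desc = 'LOG';
```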

QUESTION 11

You plan to install Microsoft SQL Server 2014 for a web hosting company.

The company plans to host multiple web sites, each supported by a SQL Server database.

You need to select an edition of SQL Server that features backup compression of databases, basic data integration features, and low total cost of ownership.

Which edition should you choose?

A. Express Edition with Tools

B. Standard Edition

C. Web Edition

D. Express Edition with Advanced Services

Correct Answer: B

QUESTION 12

You are the database administrator for your company. Your company has one main office and two branch offices. You plan to create three databases named DB1, DB2, and DB3 that will be hosted on one Azure SQL Database server. You have the following requirements:

The main office must be able to connect to all three databases. The branch offices must be able to connect to DB2 and DB3. The branch offices must not be able to access DB1.

You need to configure transparent data encryption (TDE) for DB1. Which two actions should you perform?

Each correct answer presents part of the solution.

A. Run CREATE CERTIFICATE Cert1 WITH SUBJECT = 'TDE Cert1' on DB1.

B. Connect to DB1.

C. Run ALTER DATABASE DB1 SET ENCRYPTION ON;.

D. Connect to the master database.

E. Run CREATE MASTER KEY on the master database.

Correct Answer: BC

Explanation:

You should connect to DB1. To encrypt DB1, you connect directly to DB1, using your dbmanager or administrative credentials.

You should then run ALTER DATABASE DB1 SET ENCRYPTION ON. This is the statement that turns on TDE for Azure SQL Database.
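The two steps can be sketched as follows, assuming the session is already connected to DB1 with sufficient permissions. The verification query is an addition for illustration and is not part of the answer.

```sql
-- Run while connected to DB1.
ALTER DATABASE DB1 SET ENCRYPTION ON;

-- Optional check: is_encrypted becomes 1 once TDE is enabled.
SELECT name, is_encrypted
FROM sys.databases
WHERE name = N'DB1';
```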

QUESTION 13

You create a new Microsoft Azure subscription.

You need to create a group of Azure SQL databases that share resources.

Which cmdlet should you run first?

A. New-AzureRmAvailabilitySet

B. New-AzureRmLoadBalancer

C. New-AzureRmSqlDatabaseSecondary

D. New-AzureRmSqlElasticPool

E. New-AzureRmVM

F. New-AzureRmSqlServer

G. New-AzureRmSqlDatabaseCopy

H. New-AzureRmSqlServerCommunicationLink

Correct Answer: D

Explanation:

SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single Azure SQL Database server and share a set number of resources (elastic Database Transaction Units (eDTUs)) at a set price. Elastic pools in Azure SQL Database enable SaaS developers to optimize the price performance for a group of databases within a prescribed budget while delivering performance elasticity for each database.

QUESTION 14

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are migrating an on-premises Microsoft SQL Server instance to SQL Server on a Microsoft Azure virtual machine. The instance has 30 databases that consume a total of 2 TB of disk space.

The instance sustains more than 30,000 transactions per second.

You need to provision storage for the virtual machine. The storage must be able to support the same load as the on-premises deployment.

Solution: You create one storage account that has 30 containers. You create a VHD in each container.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Explanation:

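Each storage account handles up to 20,000 IOPS and 500 TB of data. A single storage account therefore cannot sustain a load of more than 30,000 operations per second, regardless of how many containers it holds.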

QUESTION 15

You have a database named DB1 that uses the simple recovery model.

Full backups of DB1 are taken daily and DB1 is checked for corruption before each backup.

There was no corruption when the last backup was complete.

You query the sys.columns catalog view and discover corrupt pages.

You need to recover the database. The solution must minimize data loss.

What should you do?

A. Run RESTORE DATABASE WITH RECOVERY.

B. Run RESTORE DATABASE WITH PAGE.

C. Run DBCC CHECKDB and specify the REPAIR_ALLOW_DATA_LOSS parameter.

D. Run DBCC CHECKDB and specify the REPAIR_REBUILD parameter.

Correct Answer: B

Explanation:

A page restore is intended for repairing isolated damaged pages. Restoring and recovering a few individual pages might be faster than a file restore, reducing the amount of data that is offline during a restore operation.

RESTORE DATABASE WITH PAGE

Restores individual pages. Page restore is available only under the full and bulk-logged recovery models.
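For reference, the general shape of a page restore is sketched below. It assumes a database under the full recovery model, as the explanation above requires, so it illustrates the syntax only and not the simple-recovery scenario in the question. The page ID and backup paths are hypothetical.

```sql
-- Restore only the damaged page (file ID 1, page 57) from the full backup.
RESTORE DATABASE DB1
    PAGE = '1:57'
    FROM DISK = N'D:\Backups\DB1_full.bak'   -- hypothetical path
    WITH NORECOVERY;

-- Any existing log backups taken after the full backup would be
-- restored here, each WITH NORECOVERY.

-- Back up the current log, then restore it to bring the page up to date.
BACKUP LOG DB1 TO DISK = N'D:\Backups\DB1_tail.trn';
RESTORE LOG DB1 FROM DISK = N'D:\Backups\DB1_tail.trn' WITH RECOVERY;
```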

QUESTION 16

You administer a Microsoft SQL Server 2014 database.

You have a SQL Server Agent job instance that runs using the service account. You have a job step within the job that requires elevated privileges.

You need to ensure that the job step can run using a different user account.

What should you use?

A. a schedule

B. an alert

C. an operator

D. a proxy

Correct Answer: D

Explanation:

A SQL Server Agent proxy defines the security context for a job step. A proxy provides SQL Server Agent with access to the security credentials for a Microsoft Windows user. Each proxy can be associated with one or more subsystems. A job step that uses the proxy can access the specified subsystems by using the security context of the Windows user. Before SQL Server Agent runs a job step that uses a proxy, SQL Server Agent impersonates the credentials defined in the proxy, and then runs the job step by using that security context.
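Setting up such a proxy could look roughly like the sketch below. The credential, proxy, account, job name, and step number are all hypothetical, and the step is assumed to be a CmdExec step, since Transact-SQL job steps do not use proxies.

```sql
-- 1. Store the Windows account that has the elevated privileges.
CREATE CREDENTIAL ElevatedStepCredential
    WITH IDENTITY = N'CONTOSO\ElevatedUser',   -- hypothetical account
         SECRET   = N'<password>';
GO

-- 2. Create a proxy on top of the credential.
EXEC msdb.dbo.sp_add_proxy
    @proxy_name      = N'ElevatedStepProxy',
    @credential_name = N'ElevatedStepCredential',
    @enabled         = 1;

-- 3. Allow the proxy to be used by the CmdExec subsystem.
EXEC msdb.dbo.sp_grant_proxy_to_subsystem
    @proxy_name     = N'ElevatedStepProxy',
    @subsystem_name = N'CmdExec';

-- 4. Point the job step at the proxy.
EXEC msdb.dbo.sp_update_jobstep
    @job_name   = N'NightlyLoad',   -- hypothetical job
    @step_id    = 2,                -- hypothetical step
    @proxy_name = N'ElevatedStepProxy';
```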

QUESTION 17

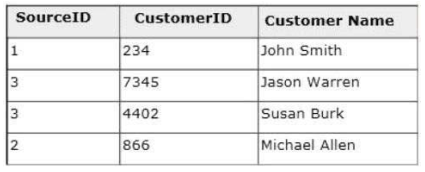

You are a database developer of a Microsoft SQL Server 2014 database. You are designing a table that will store Customer data from different sources. 70-765 dumps The table will include a column that contains the CustomerID from the source system and a column that contains the SourceID. A sample of this data is as shown in the following table.

You need to ensure that the table has no duplicate CustomerID within a SourceID. You also need to ensure that the data in the table is in the order of SourceID and then CustomerID. Which Transact-SQL statement should you use?

A. CREATE TABLE Customer(SourceID int NOT NULL IDENTITY,CustomerID int NOT NULL IDENTITY,CustomerName varchar(255) NOT NULL);

B. CREATE TABLE Customer(SourceID int NOT NULL,CustomerID int NOT NULL PRIMARY KEY CLUSTERED,CustomerName varchar(255) NOT NULL);

C. CREATE TABLE Customer(SourceID int NOT NULL PRIMARY KEY CLUSTERED,CustomerID int NOT NULL UNIQUE,CustomerName varchar(255) NOT NULL);

D. CREATE TABLE Customer(SourceID int NOT NULL,CustomerID int NOT NULL,CustomerName varchar(255) NOT NULL,CONSTRAINT PK_Customer PRIMARY KEY CLUSTERED(SourceID, CustomerID));

Correct Answer: D
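To see why the composite clustered primary key satisfies both requirements, consider the short demonstration below. The inserted values are made up for illustration, since the sample table from the question is only available as an image.

```sql
CREATE TABLE Customer (
    SourceID     int          NOT NULL,
    CustomerID   int          NOT NULL,
    CustomerName varchar(255) NOT NULL,
    -- Uniqueness is enforced on the pair, and the clustered index
    -- stores rows ordered by SourceID, then CustomerID.
    CONSTRAINT PK_Customer PRIMARY KEY CLUSTERED (SourceID, CustomerID)
);

INSERT INTO Customer VALUES (1, 234, 'John Smith');
INSERT INTO Customer VALUES (2, 234, 'Jane Doe');    -- same CustomerID, different source: allowed
INSERT INTO Customer VALUES (1, 234, 'Duplicate');   -- fails with a primary key violation
```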

QUESTION 18

You administer a Microsoft SQL Server 2014 failover cluster.

You need to ensure that a failover occurs when the server diagnostics returns a query_processing error.

Which server configuration property should you set?

A. SqlDumperDumpFlags

B. FailureConditionLevel

C. HealthCheckTimeout

D. SqlDumperDumpPath

Correct Answer: B

Explanation:

Use the FailureConditionLevel property to set the conditions for the Always On Failover Cluster Instance (FCI) to fail over or restart.

The failure conditions are set on an increasing scale. For levels 1-5, each level includes all the conditions from the previous levels in addition to its own conditions.

Note: The system stored procedure sp_server_diagnostics periodically collects component diagnostics on the SQL instance. The diagnostic information that is collected is surfaced as a row for each of the following components and passed to the calling thread. The system, resource, and query_processing components are used for failure detection. The io_subsystem and events components are used for diagnostic purposes only.
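As a sketch, the property can be set with Transact-SQL as shown below. Level 5 is used here because it is the level that adds query_processing errors to the failure conditions; the default is level 3.

```sql
-- Fail over or restart on any qualified failure condition,
-- including query_processing errors reported by sp_server_diagnostics.
ALTER SERVER CONFIGURATION
SET FAILOVER CLUSTER PROPERTY FailureConditionLevel = 5;
```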

QUESTION 19

You administer a Microsoft SQL Server 2014 instance.

A process that normally runs in less than 10 seconds has been running for more than an hour.

You examine the application log and discover that the process is using session ID 60.

You need to find out whether the process is being blocked.

Which Transact-SQL statement should you use?

A. EXEC sp_who 60

B. SELECT * FROM sys.dm_exec_sessions WHERE sessionid = 60

C. EXEC sp_helpdb 60

D. DBCC INPUTBUFFER (60)

Correct Answer: A

Explanation:

sp_who provides information about current users, sessions, and processes in an instance of the Microsoft SQL Server Database Engine. The information can be filtered to return only those processes that are not idle, that belong to a specific user, or that belong to a specific session.

Example: Displaying a specific process identified by a session ID: EXEC sp_who '10'; --specifies the process_id
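Applied to the session in the question, the check might look like this. The second query against sys.dm_exec_requests is an alternative added for illustration and is not one of the answer options.

```sql
-- The blk column of the result holds the session ID of the blocking
-- process, or 0 if the session is not blocked.
EXEC sp_who '60';

-- Alternative view of the same information for an active request.
SELECT session_id, blocking_session_id, wait_type, wait_time
FROM sys.dm_exec_requests
WHERE session_id = 60;
```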

QUESTION 20

You administer a Microsoft SQL Server 2014 database that includes a table named Application.Events. Application.Events contains millions of records about user activity in an application.

Records in Application.Events that are more than 90 days old are purged nightly. When records are purged, table locks are causing contention with inserts.

You need to be able to modify Application.Events without requiring any changes to the applications that utilize Application.Events.

Which type of solution should you use?

A. Partitioned tables

B. Online index rebuild

C. Change data capture

D. Change tracking

Correct Answer: A

Explanation:

Partitioning large tables or indexes can have manageability and performance benefits including:

You can perform maintenance operations on one or more partitions more quickly. The operations are more efficient because they target only these data subsets, instead of the whole table.
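A nightly purge on a partitioned table could be sketched as follows. It assumes Application.Events is partitioned by date on a hypothetical partition function pfEvents, that partition 1 holds the oldest rows, and that Application.Events_Purge is an empty table with an identical structure on the same filegroup.

```sql
-- Move the oldest partition out of the live table. This is a
-- metadata-only operation, so it avoids the long-held locks that
-- a large DELETE would take.
ALTER TABLE Application.Events
    SWITCH PARTITION 1 TO Application.Events_Purge;

-- Discard the switched-out rows.
TRUNCATE TABLE Application.Events_Purge;

-- Remove the now-empty boundary (hypothetical boundary value).
ALTER PARTITION FUNCTION pfEvents() MERGE RANGE ('2016-01-01');
```

Applications continue to reference Application.Events by the same name, so no application changes are needed.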
